The term transds might appear unusual at first glance, yet it encapsulates a set of distinctive concepts that deserve clarity and attention. In this article, you’ll gain a comprehensive understanding of what transds are, how they function, and why they matter across various domains. We’ll explore applications, advantages, challenges, and practical guidance.

What are transds?

At its core, transds refers to transformational datasets, or transformations of data, that bridge raw information and actionable insights. The term applies in fields ranging from machine learning preprocessing to data migration in enterprise systems.

In machine learning, for instance, transds might mean a structured pipeline that converts raw sensor readings into normalized, high-quality training input. In business IT, transds might describe the staged transformation of legacy database records into a new schema. In any context, transds stands for both the transformation process and the result it yields.

Key Features of transds

Seamless data normalization with transds

One hallmark of effective transds is seamless normalization—making formats, scales, and conventions uniform so that downstream systems can reliably interpret the information. For example, a transds pipeline may convert disparate date formats into ISO 8601, adjust currency values to a base unit, or clean text by removing inconsistent special characters.
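
A minimal Python sketch of the three normalizations mentioned above follows. It assumes a fixed list of accepted source date formats and a static exchange-rate table; `DATE_FORMATS`, `RATES_TO_USD`, and the function names are hypothetical, and the rates are placeholders rather than real figures.

```python
import re
from datetime import datetime
from decimal import Decimal

# Hypothetical set of source date formats this pipeline accepts.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

# Hypothetical, fixed conversion rates to the base unit (USD).
# A real pipeline would look these up for the transaction date.
RATES_TO_USD = {"USD": Decimal("1"), "EUR": Decimal("1.10"), "GBP": Decimal("1.25")}


def normalize_date(raw: str) -> str:
    """Parse a date in any accepted format and return it as ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next accepted format
    raise ValueError(f"unrecognized date format: {raw!r}")


def to_base_currency(amount: str, currency: str) -> Decimal:
    """Convert an amount to USD, rounded to cents; Decimal avoids float rounding drift."""
    return (Decimal(amount) * RATES_TO_USD[currency.upper()]).quantize(Decimal("0.01"))


def clean_text(raw: str) -> str:
    """Drop characters outside a conservative allow-list and collapse whitespace."""
    kept = re.sub(r"[^\w\s\-.,]", "", raw)
    return " ".join(kept.split())
```

Note that a day-first format such as `%d/%m/%Y` cannot be told apart from a month-first one by parsing alone, so each source's convention belongs in its specification rather than in guesswork.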

Efficient transformation process in transds

Efficiency defines high-quality transds implementations. Whether through in-memory operations or distributed computing, well-designed transds pipelines minimize processing time while preserving fidelity. Techniques such as vectorized operations, batch processing, and incremental updates often underpin efficient transds.
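
Batch processing and incremental updates can be illustrated together. This sketch assumes each record carries a monotonically increasing `updated_at` value and that the returned watermark is persisted between runs; `batches` and `incremental_run` are hypothetical names. Vectorized operations (for example with NumPy or pandas) are not shown.

```python
from typing import Callable, Iterable, Iterator


def batches(items: Iterable, size: int) -> Iterator[list]:
    """Yield lists of at most `size` items so work is done in bulk."""
    batch: list = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly shorter batch


def incremental_run(records: Iterable[dict], watermark: int,
                    transform: Callable[[list[dict]], list[dict]],
                    batch_size: int = 1000) -> tuple[list[dict], int]:
    """Transform only records updated after `watermark`; return outputs and the new watermark."""
    fresh = (r for r in records if r["updated_at"] > watermark)
    out: list[dict] = []
    new_watermark = watermark
    for batch in batches(fresh, batch_size):
        out.extend(transform(batch))
        new_watermark = max(new_watermark, max(r["updated_at"] for r in batch))
    return out, new_watermark
```

Only records newer than the watermark are touched, so rerunning with the returned watermark does no work until new data arrives.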

Transparency and traceability within transds

Another strength of transds lies in transparency. Good transds systems provide clear logging and lineage tracking, enabling users to trace each output back to its input. This traceability proves invaluable for debugging, audits, and ensuring compliance.
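
Lineage tracking can be as simple as carrying the source identifier and the list of applied steps alongside each value. A minimal sketch, assuming records are identified by a string such as `file:line`; `Traced`, `apply_step`, and the logger name are hypothetical.

```python
import logging
from dataclasses import dataclass, field
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("transds.lineage")  # hypothetical logger name


@dataclass
class Traced:
    """A value plus the lineage needed to trace it back to its input."""
    source_id: str
    value: Any
    steps: list[str] = field(default_factory=list)


def apply_step(rec: Traced, name: str, fn: Callable[[Any], Any]) -> Traced:
    """Apply one transformation, logging before/after values and recording the step name."""
    new_value = fn(rec.value)
    log.info("source=%s step=%s %r -> %r", rec.source_id, name, rec.value, new_value)
    # Return a new record so earlier states are never mutated.
    return Traced(rec.source_id, new_value, rec.steps + [name])
```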

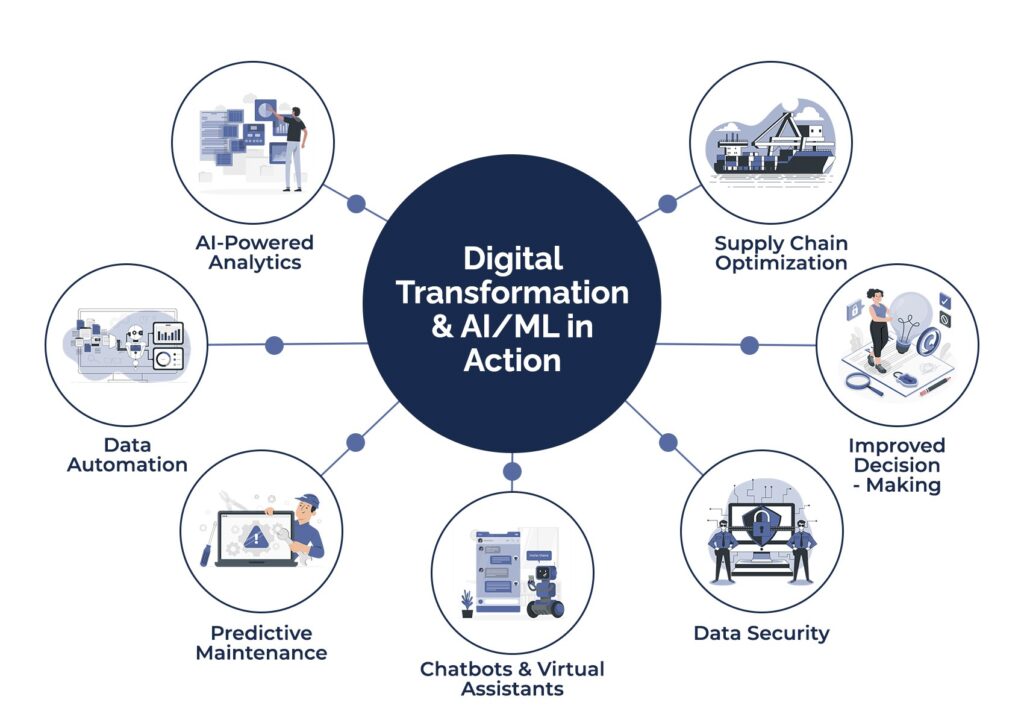

Applications of transds

Machine learning pipelines

In AI, transds underpins the crucial step of preparing raw data for model training. This includes:

- Cleaning missing or irregular entries
- Scaling continuous variables
- One-hot encoding categorical features
- Generating derived features, such as rolling averages or normalized ratios

These transds steps significantly affect model accuracy, robustness, and fairness.
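
The four preparation steps listed above can be sketched in plain Python for small in-memory columns. The function names are hypothetical; in practice, libraries such as scikit-learn provide equivalents (for example `SimpleImputer`, `MinMaxScaler`, and `OneHotEncoder`).

```python
from statistics import mean
from typing import Optional


def impute_mean(values: list[Optional[float]]) -> list[float]:
    """Replace missing entries (None) with the mean of the observed values."""
    fill = mean(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def min_max_scale(values: list[float]) -> list[float]:
    """Rescale to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def one_hot(labels: list[str]) -> tuple[list[str], list[list[int]]]:
    """Encode categories as 0/1 indicator columns, in sorted category order."""
    categories = sorted(set(labels))
    return categories, [[int(lab == c) for c in categories] for lab in labels]


def rolling_mean(values: list[float], window: int) -> list[Optional[float]]:
    """Trailing rolling average; None until a full window is available."""
    return [None if i + 1 < window else sum(values[i + 1 - window:i + 1]) / window
            for i in range(len(values))]
```

For model training, the statistics used here (the mean, minimum, and maximum) should be computed on the training split only and then reused on validation and test data; otherwise information leaks from evaluation data into training.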

Enterprise data migration using transds

Businesses migrating from legacy systems to modern platforms rely on transds to transform data structures. Migrations involve mapping old tables to new ones, converting field types (e.g., string to date), and reconciling inconsistent values. Well-planned transds frameworks can minimize downtime and maintain data integrity during transitions.
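
A row-level migration mapping might look like the following sketch. The legacy column names, the `YYYYMMDD` date encoding, and the status codes are all hypothetical, chosen to show renaming, type conversion, and value reconciliation in one place.

```python
from datetime import datetime

# Hypothetical legacy-to-target column mapping.
COLUMN_MAP = {"CUST_NM": "customer_name", "ORD_DT": "order_date", "STAT": "status"}

# Hypothetical reconciliation of inconsistent legacy status codes.
STATUS_MAP = {"C": "completed", "COMP": "completed", "X": "cancelled", "CANC": "cancelled"}


def migrate_row(legacy: dict[str, str]) -> dict[str, object]:
    """Rename columns, convert the date string to a date, and reconcile status codes."""
    row: dict[str, object] = {new: legacy[old] for old, new in COLUMN_MAP.items()}
    row["customer_name"] = legacy["CUST_NM"].strip().title()
    # String-to-date conversion: the legacy field stores dates as YYYYMMDD text.
    row["order_date"] = datetime.strptime(legacy["ORD_DT"], "%Y%m%d").date()
    row["status"] = STATUS_MAP.get(legacy["STAT"].strip().upper(), "unknown")
    return row
```

Mapping unrecognized status codes to `"unknown"` keeps the migration running; a stricter design would route such rows to manual review instead.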

Real-time analytics and streaming transds

In streaming analytics, transds plays a vital role. Real-time data, such as click streams, IoT signals, or financial ticks, must be transformed on the fly. Streaming transds pipelines might normalize, filter, or enrich data before feeding dashboards or alert systems, so that insights arrive promptly and in the correct form.
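
Streaming transformations are naturally expressed as lazy stages that process one event at a time. This sketch uses Python generators rather than a real stream processor; the event fields, the `DEVICE_REGION` lookup, and the stage names are hypothetical.

```python
from typing import Iterable, Iterator

# Hypothetical lookup table used for enrichment.
DEVICE_REGION = {"sensor-1": "north", "sensor-2": "south"}


def drop_invalid(events: Iterable[dict]) -> Iterator[dict]:
    """Filter out events that carry no reading."""
    return (e for e in events if e.get("value") is not None)


def normalize(events: Iterable[dict]) -> Iterator[dict]:
    """Convert Fahrenheit readings to Celsius so downstream code sees one unit."""
    for e in events:
        if e.get("unit") == "F":
            e = {**e, "value": round((e["value"] - 32) * 5 / 9, 2), "unit": "C"}
        yield e


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Attach the device's region, defaulting to 'unknown'."""
    for e in events:
        yield {**e, "region": DEVICE_REGION.get(e["device"], "unknown")}


def pipeline(events: Iterable[dict]) -> Iterator[dict]:
    # Every stage is lazy, so each event flows through all stages before the next is read.
    return enrich(normalize(drop_invalid(events)))
```

Production stream processors such as Kafka Streams or Apache Flink add what this sketch omits, including state, windowing, and delivery guarantees.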

Benefits

Improved accuracy and data quality via transds

By standardizing and cleaning data, transds reduces errors and inconsistencies. This yields more accurate insights, higher-quality machine learning models, and greater trust in business intelligence outputs.

Scalability and performance boosted by transds

Well-designed transds built on batch or streaming frameworks, such as Apache Spark or Apache Flink, scale effectively to large volumes, preserving responsiveness and throughput as data grows.

Enhanced governance through transds

Because transds systems often include trace logs, lineage metadata, and validation checkpoints, they strongly support governance. You can audit each transformation, verify rules, and produce compliance reports from transformation records.

Best Practices for Designing transds

Define clear transformation specifications

Effective transds begins with clear, unambiguous specifications: what each source field means, how it must be transformed, and its target format. Specifications act as requirements documentation, guiding implementation and testing.
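
One way to keep a specification unambiguous is to make it executable: each entry records the source field, the target field, its meaning, and the transformation. A sketch, assuming a hypothetical customer feed; `FieldSpec`, `SPEC`, and `apply_spec` are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class FieldSpec:
    """One row of a transformation specification."""
    source: str                          # source field name
    target: str                          # target field name
    meaning: str                         # what the field represents, for documentation
    transform: Callable[[str], object]   # how the raw value becomes the target value


# Hypothetical spec for a customer feed.
SPEC = [
    FieldSpec("cust_id", "customer_id", "Stable customer key", int),
    FieldSpec("email", "email", "Contact address, lower-cased", lambda s: s.strip().lower()),
    FieldSpec("signup", "signup_year", "Year of first signup (YYYY-MM-DD input)", lambda s: int(s[:4])),
]


def apply_spec(record: dict[str, str], spec: list[FieldSpec]) -> dict[str, object]:
    """Build the target record field by field, exactly as the spec describes."""
    return {f.target: f.transform(record[f.source]) for f in spec}
```

Because the `meaning` column lives next to the logic, the same list can also be rendered as documentation for reviewers.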

Modularize your transds pipeline

Break transds down into modular components: parsing, cleaning, encoding, validating, and enriching. Modularity helps you isolate issues, reuse components, and adapt pipelines as requirements evolve.
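
Modular stages can be plain functions chained together. The sketch below assumes dict records and shows four hypothetical stages (parsing, cleaning, validation, enrichment) composed with `functools.reduce`; an encoding stage would slot in the same way.

```python
from functools import reduce
from typing import Callable

Stage = Callable[[dict], dict]


def parse(r: dict) -> dict:
    """Convert raw strings to typed values."""
    return {**r, "qty": int(r["qty"]), "price": float(r["price"])}


def clean(r: dict) -> dict:
    """Normalize the SKU's whitespace and case."""
    return {**r, "sku": r["sku"].strip().upper()}


def validate(r: dict) -> dict:
    """Reject records that violate a business rule."""
    if r["qty"] < 0:
        raise ValueError(f"negative quantity in {r}")
    return r


def enrich(r: dict) -> dict:
    """Add a derived field."""
    return {**r, "total": round(r["qty"] * r["price"], 2)}


def compose(*stages: Stage) -> Stage:
    """Chain stages left to right into a single record transformer."""
    return lambda record: reduce(lambda acc, stage: stage(acc), stages, record)


orders_pipeline = compose(parse, clean, validate, enrich)
```

Each stage can be unit-tested on its own and reused in a different `compose(...)` call.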

Automate validation and monitoring in transds

Incorporate automated checks at each transds stage. Use assertion rules (e.g., “age must be between 0 and 120”) and monitor data volume and latency. Alerts help catch unexpected changes early.
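
Validation rules and a basic volume check could be expressed as follows. The rule list, the 20% tolerance, and the function names are hypothetical; how alerts are delivered is out of scope.

```python
from typing import Callable

# Each rule pairs a human-readable name with a predicate over one record.
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("age must be between 0 and 120", lambda r: 0 <= r["age"] <= 120),
    ("email must contain '@'", lambda r: "@" in r["email"]),
]


def check_records(records: list[dict]) -> list[str]:
    """Return one message per failed rule per record (an empty list means all passed)."""
    return [f"record {i}: {name}"
            for i, r in enumerate(records)
            for name, ok in RULES
            if not ok(r)]


def check_volume(count: int, expected: int, tolerance: float = 0.2) -> bool:
    """Return True if the row count is within `tolerance` of the expected count; False should raise an alert."""
    return abs(count - expected) <= tolerance * expected
```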

Document and version control your transds

Treat transds definitions (mappings, scripts, transformation logic) as versioned artifacts. Documentation helps team members understand transformation history and rationale, while version control lets you roll back or compare versions.

Prioritize performance tuning in transds

Use efficient data processing frameworks and optimize bottlenecks. For example, avoid repeated full-data scans, cache frequently used lookups in memory, and parallelize independent transformations. Benchmarking helps identify hot spots.
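
Two of these optimizations are easy to show in isolation: computing several aggregates in a single pass, and caching an expensive lookup. A sketch, where `category_for` stands in for a hypothetical slow call (such as a database query) and `CALLS` exists only to count real invocations.

```python
from functools import lru_cache
from typing import Iterable


def summarize(amounts: Iterable[float]) -> dict:
    """Compute count, sum, min and max in one pass instead of four separate scans."""
    count, total = 0, 0.0
    lo, hi = float("inf"), float("-inf")
    for a in amounts:
        count += 1
        total += a
        lo, hi = min(lo, a), max(hi, a)
    return {"count": count, "sum": total, "min": lo, "max": hi}


CALLS = {"n": 0}  # counts how often the "expensive" lookup really runs


@lru_cache(maxsize=10_000)
def category_for(sku: str) -> str:
    """Hypothetical expensive lookup, cached per SKU so repeats are served from memory."""
    CALLS["n"] += 1
    return "beverages" if sku.startswith("BEV") else "other"
```

Because `summarize` consumes an iterable once, it also works on a generator that streams rows from disk. Parallelizing independent transformations (for example with `concurrent.futures.ProcessPoolExecutor`) is a further step not shown here.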

Challenges

Handling edge cases within transds

Unexpected input formats or rare data patterns often break transds pipelines. Robust error handling and fallback strategies are essential, but planning for the unknown can be tough.
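
A common fallback strategy is to try a primary transformation, then a lenient alternative, and quarantine whatever still fails instead of dropping it. A sketch with hypothetical names; it catches only the exception types expected from bad data, so genuine bugs still surface.

```python
from typing import Callable, Iterable, Optional

EXPECTED_ERRORS = (KeyError, ValueError, TypeError)


def transform_with_quarantine(records: Iterable[dict],
                              transform: Callable[[dict], dict],
                              fallback: Optional[Callable[[dict], dict]] = None):
    """Apply `transform`; on failure try `fallback`, otherwise quarantine the record with its error."""
    good: list[dict] = []
    quarantined: list[dict] = []
    for rec in records:
        try:
            good.append(transform(rec))
        except EXPECTED_ERRORS as exc:
            if fallback is not None:
                try:
                    good.append(fallback(rec))
                    continue
                except EXPECTED_ERRORS:
                    pass  # fall through and quarantine with the original error
            quarantined.append({"record": rec, "error": repr(exc)})
    return good, quarantined
```

For example, a primary parser might expect `2.5` while the fallback accepts a decimal comma (`3,75`); anything neither can read lands in the quarantine list for review.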

Balancing flexibility and stability

Designing transds to handle new data types without introducing errors is a balancing act. Too rigid, and pipelines break when sources change; too loose, and bad data slips through. Finding the sweet spot is critical.
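
One way to strike this balance is to be strict about the fields the pipeline depends on and flexible about everything else. In this sketch, `REQUIRED` and `ALIASES` (for fields renamed upstream) are hypothetical; unrecognized fields are set aside rather than rejected or silently dropped.

```python
REQUIRED = {"order_id", "amount"}

# Hypothetical aliases for fields that upstream sources have renamed over time.
ALIASES = {"order_no": "order_id", "total": "amount"}


def conform(raw: dict) -> tuple[dict, dict]:
    """Map a raw record onto the expected schema.

    Strict: every required field must be present (after applying aliases).
    Flexible: unrecognized fields are returned separately instead of failing or vanishing.
    """
    renamed = {ALIASES.get(k, k): v for k, v in raw.items()}
    missing = REQUIRED - set(renamed)
    if missing:
        raise ValueError(f"missing required fields: {sorted(missing)}")
    known = {k: renamed[k] for k in REQUIRED}
    extras = {k: v for k, v in renamed.items() if k not in REQUIRED}
    return known, extras
```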

Managing complexity in large-scale transds

At scale, transds pipelines may involve many stages, multiple input sources, and diverse data structures. Complexity can hamper maintenance, slow development, and increase the risk of misconfigurations.

Real-World Example

Consider a retail enterprise undertaking a data modernization project. The goal: unify sales data from point-of-sale (POS) systems, web orders, and supply chain logs into a consolidated analytics warehouse. The company designed the following transds process:

- Ingestion: Collect data from multiple sources with adapters.
- Normalization: Standardize date formats (ISO 8601), currency units (USD), and product identifiers.
- Cleaning: Remove duplicate rows, correct obvious typos, and flag missing fields.
- Enrichment: Join with the product hierarchy and compute profitability metrics.
- Validation: Confirm that totals match source aggregates and check date ranges.
- Load: Insert into the analytics database (e.g., Redshift or Snowflake).

This transds process enabled unified dashboards for sales, accurate forecasting, and a scalable system for adding new input sources later. It improved reporting accuracy and sped up decision-making.
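
The six stages above can be sketched end to end at toy scale, with each stage simplified. Everything here is hypothetical: the source rows, the product table, and the profit formula. SQLite from Python's standard library stands in for a warehouse such as Redshift or Snowflake, and the validation step is reduced to a date-range check plus a reconciliation of the loaded total against the pipeline's own total.

```python
import sqlite3
from datetime import date, datetime

# Hypothetical extracts standing in for the POS and web-order source adapters.
POS = [{"sku": "p-1", "date": "03/01/2024", "cents": 1999},
       {"sku": "p-1", "date": "03/01/2024", "cents": 1999}]  # exact duplicate row
WEB = [{"product": "P-2", "ordered": "2024-01-04", "amount": "5.00"}]
# Hypothetical product hierarchy: SKU -> (category, unit cost in USD).
PRODUCTS = {"P-1": ("snacks", 12.00), "P-2": ("drinks", 3.00)}


def ingest() -> list[tuple[str, dict]]:
    """Collect raw rows, tagged with the source they came from."""
    return [("pos", r) for r in POS] + [("web", r) for r in WEB]


def normalize(source: str, r: dict) -> dict:
    """Map each source onto one shape: upper-case SKU, ISO 8601 date, USD amount."""
    if source == "pos":
        day = datetime.strptime(r["date"], "%d/%m/%Y").date()
        return {"sku": r["sku"].upper(), "date": day.isoformat(), "usd": r["cents"] / 100}
    return {"sku": r["product"].upper(), "date": r["ordered"], "usd": float(r["amount"])}


def clean(rows: list[dict]) -> list[dict]:
    """Drop exact duplicate rows, keeping first occurrences in order."""
    seen, out = set(), []
    for r in rows:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            out.append(r)
    return out


def enrich(rows: list[dict]) -> list[dict]:
    """Join with the product hierarchy and compute a simple profit metric."""
    return [{**r, "category": PRODUCTS[r["sku"]][0],
             "profit": round(r["usd"] - PRODUCTS[r["sku"]][1], 2)} for r in rows]


def validate(rows: list[dict], start: date, end: date) -> None:
    """Fail fast if any date falls outside the expected reporting window."""
    for r in rows:
        if not start <= date.fromisoformat(r["date"]) <= end:
            raise ValueError(f"date out of range: {r}")


def load(rows: list[dict], conn: sqlite3.Connection) -> None:
    """Insert rows, then reconcile the loaded total (assumes the table starts empty)."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales "
                 "(sku TEXT, date TEXT, usd REAL, category TEXT, profit REAL)")
    conn.executemany("INSERT INTO sales VALUES (:sku, :date, :usd, :category, :profit)", rows)
    loaded = conn.execute("SELECT COALESCE(SUM(usd), 0) FROM sales").fetchone()[0]
    if round(loaded, 2) != round(sum(r["usd"] for r in rows), 2):
        raise ValueError("loaded total does not match pipeline total")


def run(conn: sqlite3.Connection) -> list[dict]:
    rows = enrich(clean([normalize(src, r) for src, r in ingest()]))
    validate(rows, date(2024, 1, 1), date(2024, 12, 31))
    load(rows, conn)
    return rows
```

Adding a new source in this design means writing one more branch in `normalize` (or one more adapter); the later stages stay unchanged.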

Tips for Getting Started

- Start small: Begin by transforming one dataset end to end, and test thoroughly.
- Use existing tools: Leverage ETL/ELT and orchestration tools like Apache NiFi, Apache Airflow, Talend, or dbt. Depending on the tool, they provide orchestration, retry logic, monitoring, or testing.
- Plan for schema evolution: Design pipelines to accommodate source changes, such as new or renamed fields.
- Engage stakeholders: Work with data producers and consumers to align transformations with business needs.
- Iterate and improve: Treat transds as living processes; refine logic, add tests, measure performance, and respond to feedback.

Frequently Asked Questions

What exactly does “transds” stand for?

As used in this article, transds stands for transformational datasets or processes that convert raw, heterogeneous data into structured, clean, and actionable outputs.

Why is transds important for machine learning?

Raw data often includes noise, inconsistencies, or skew. Transds cleans and encodes that data so models learn from high-quality inputs, which improves model performance and reliability.

Can I reuse a transds pipeline across projects?

Yes! If your pipeline is modular and well-documented, components like normalization functions or validation rules can be reused across projects—enhancing consistency and saving effort.

What tools help implement transds effectively?

Popular choices include workflow orchestration frameworks like Apache Airflow, ETL tools like Talend or Pentaho, ELT tools like dbt, stream processing tools like Kafka Streams or Apache Flink, and cloud services like AWS Glue or Google Cloud Dataflow.

How do you measure the success of a transds process?

Metrics include transformation accuracy (mapping verification), processing latency, throughput (rows per second), data quality indicators (e.g., missingness, anomaly rate), and operational stability (failure rates, downtime).
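
Several of these metrics are straightforward to compute. The sketch below assumes a simple range rule as the anomaly definition (statistical detectors are a common alternative) and measures throughput for a single in-process run; the function names are hypothetical.

```python
import time
from typing import Callable, Optional


def quality_metrics(values: list[Optional[float]], lo: float, hi: float) -> dict[str, float]:
    """Share of missing values, and share of present values outside [lo, hi]."""
    present = [v for v in values if v is not None]
    missing_rate = 1 - len(present) / len(values) if values else 0.0
    anomaly_rate = sum(not lo <= v <= hi for v in present) / len(present) if present else 0.0
    return {"missing_rate": missing_rate, "anomaly_rate": anomaly_rate}


def throughput(transform: Callable[[dict], dict], rows: list[dict]) -> float:
    """Rows per second for one run of `transform` over `rows`."""
    start = time.perf_counter()
    for r in rows:
        transform(r)
    elapsed = time.perf_counter() - start
    return len(rows) / elapsed if elapsed > 0 else float("inf")
```

Tracking these numbers per run, rather than once, is what turns them into useful signals: a sudden jump in `missing_rate` often points to an upstream change.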

What’s the biggest risk in transds implementations?

The biggest risk is poor error handling leading to silent failures—e.g., missing or mis-transformed data entering analytics or models without detection. That undermines trust and corrupts results.
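
Two cheap guards against silent failures are a row-count reconciliation (every input row must end up either loaded or explicitly rejected) and a check that a transformation did not introduce new nulls. A sketch with hypothetical names; the null check assumes input and output rows correspond one to one.

```python
def reconcile(n_input: int, n_output: int, n_rejected: int) -> None:
    """Raise unless every input row is accounted for as either output or rejected."""
    if n_input != n_output + n_rejected:
        raise RuntimeError(
            f"{n_input - n_output - n_rejected} row(s) unaccounted for: "
            f"input={n_input}, output={n_output}, rejected={n_rejected}")


def assert_no_new_nulls(before: list[dict], after: list[dict], field: str) -> None:
    """Raise if the transformation produced more nulls in `field` than the input had."""
    nulls_before = sum(r.get(field) is None for r in before)
    nulls_after = sum(r.get(field) is None for r in after)
    if nulls_after > nulls_before:
        raise RuntimeError(f"{nulls_after - nulls_before} new null(s) in {field!r}")
```

Both checks fail loudly, which is the point: a failed run is visible and can be retried, whereas a silently incomplete one corrupts everything downstream.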

Conclusion

In today’s data-driven world, transds (transformational datasets and pipelines) play a foundational role. From enabling high-quality machine learning to ensuring accurate analytics for business intelligence, the structured transformation of raw data is essential. By embracing best practices such as clear specifications, modular design, validation, and documentation, you can build transds processes that are accurate, scalable, and trustworthy. Getting started may seem complex, but even a small, well-tested transds pipeline can deliver substantial value through cleaner data and better insight.

Optimize your data workflows with thoughtfully designed transds, and you’ll lay a solid foundation for smarter decisions and more reliable systems—today and beyond.